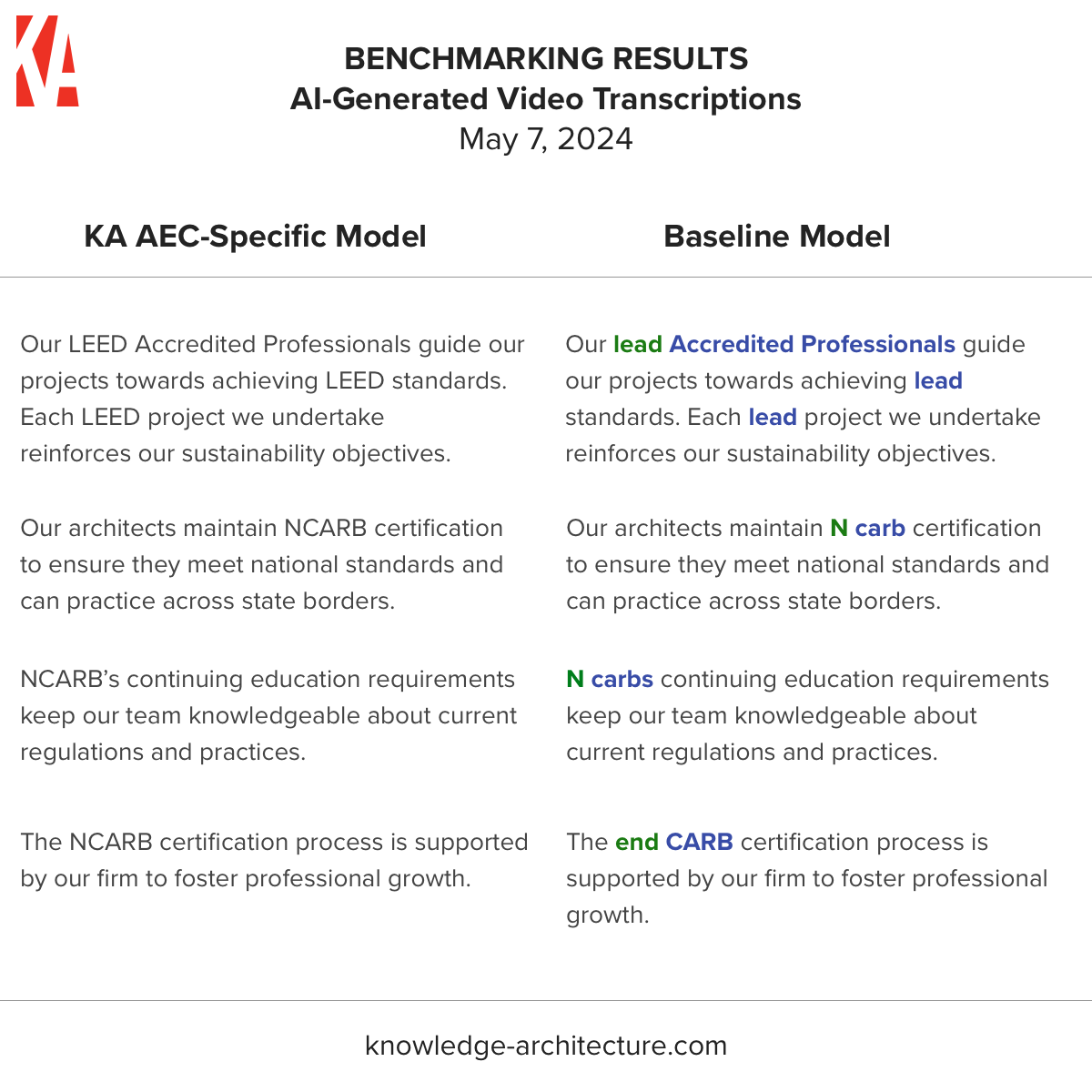

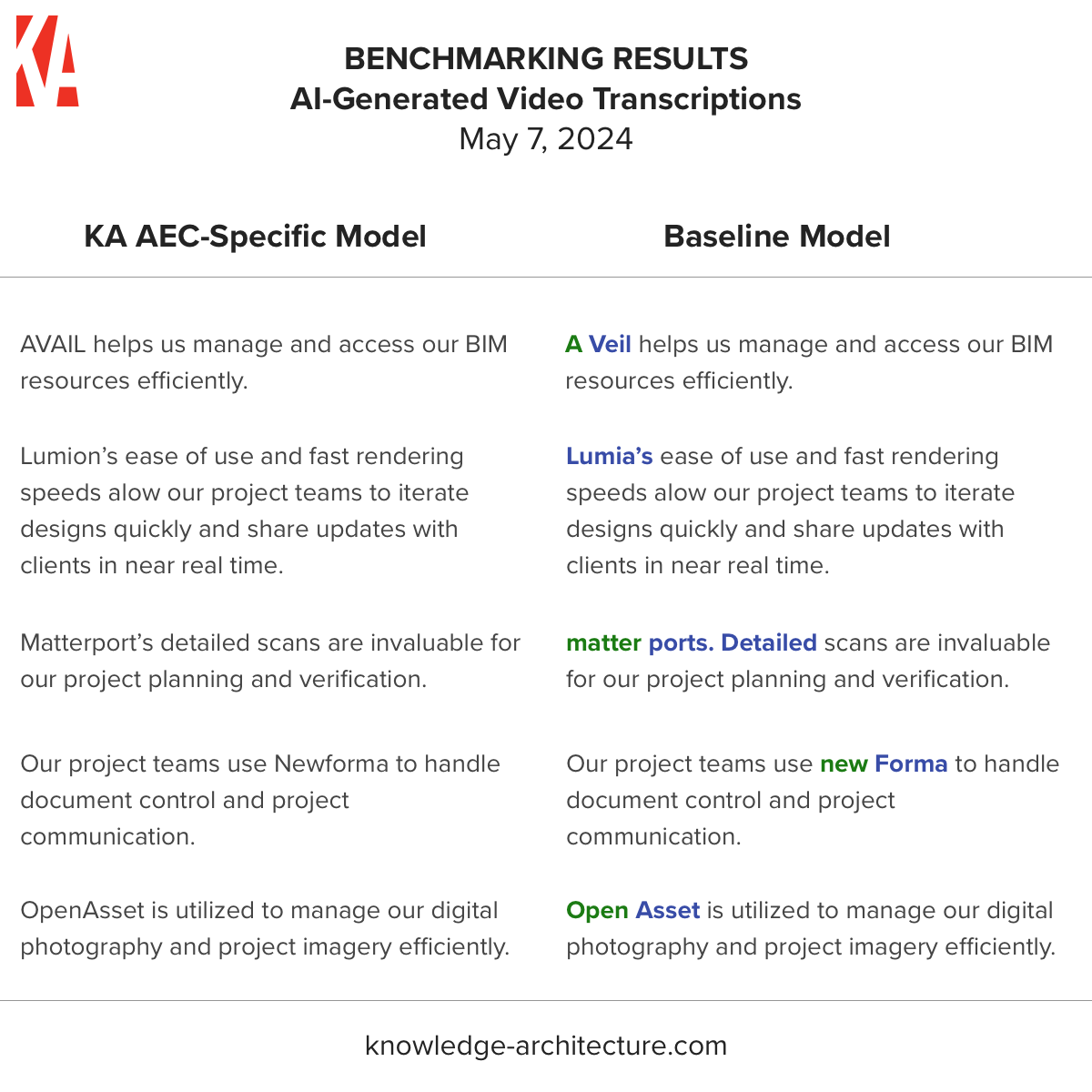

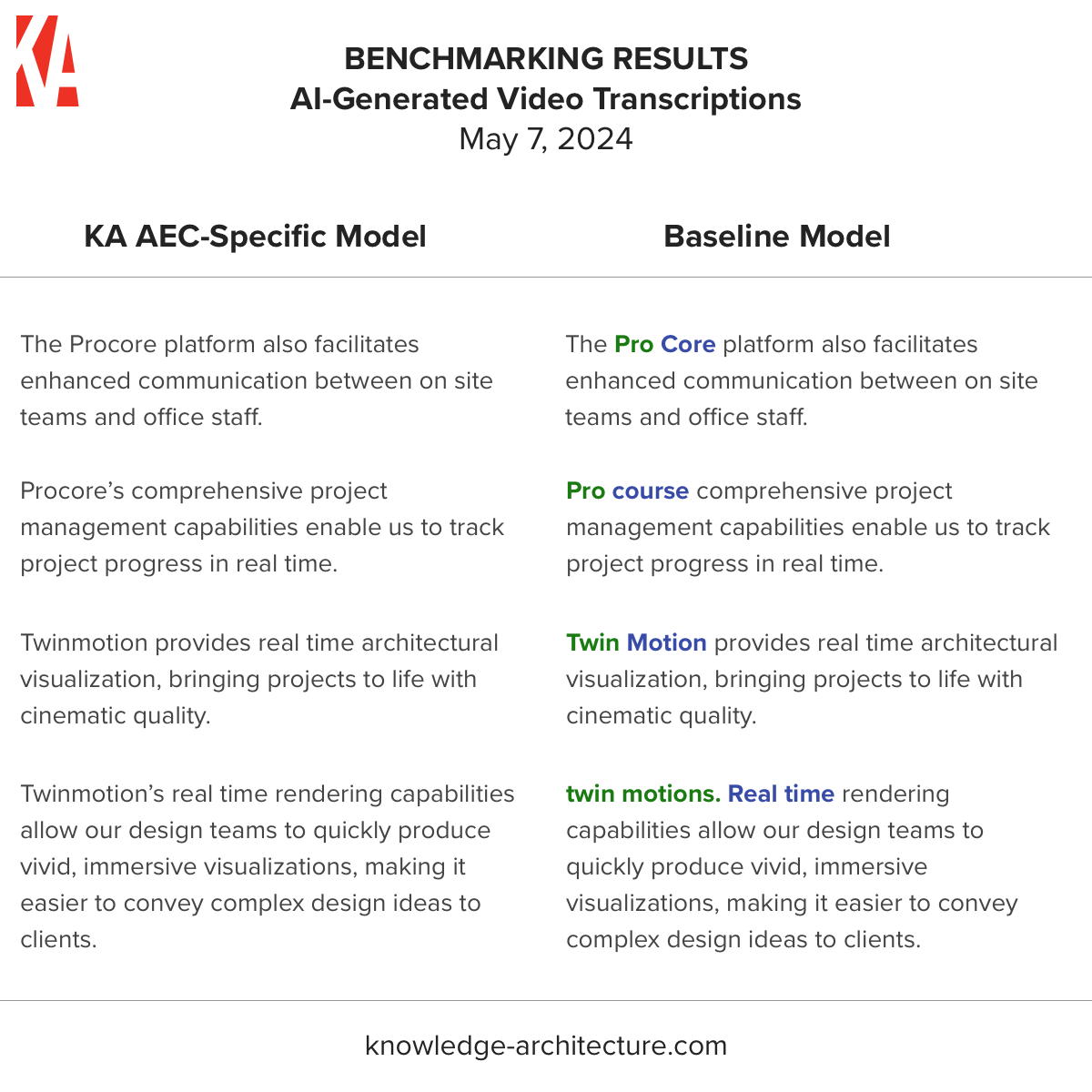

We've spent the last several months building an AEC-specific AI model for video transcription. Yesterday, we ran the first benchmark of the first-generation model against a baseline transcription model from Microsoft Azure.

One area where the KA-built AEC-specific model really shines so far is acronyms and product names, and I've shared some of the highlights in the attached images.

We've partnered with our clients to build this model (and we have another on the way) through our Community AI program. It's an opt-in program that will empower Knowledge Architecture to create AEC-specific AI infrastructure by training AI models on client content for the mutual benefit of participating firms, while protecting confidentiality and intellectual property.

Once it's released into public beta in June as part of our Synthesis Video 2 update, the AEC-specific video transcription model should help our clients make videos more accessible and more searchable on their intranets, and save our intranet champions hours and hours of editing video captions.

We're really excited to share this milestone with all of you and will keep you posted as we approach public beta.

To learn more about our product roadmap, including Community AI, Synthesis Video 2, Next Generation Search, and Synthesis LMS, please visit our roadmap page.

